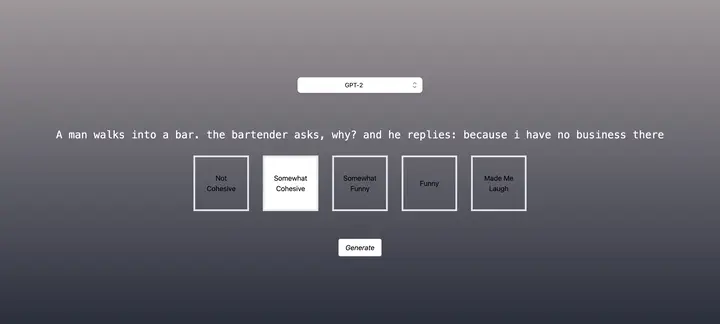

A joke generated by the GPT-2 model

The goal of our project is to generate cohesive and humorous jokes by applying modern developments in text generation, such as GPT language modelling, and to evaluate them using recent classifiers such as BERT. In addition, we implemented an N-gram joke generator and a hate speech classifier, which we use to identify jokes that may be offensive or insensitive to any group of people.
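To make the N-gram generator idea concrete, here is a minimal sketch of a bigram (N = 2) model in Python. It is an illustration only, not the project's implementation: the function names `train_bigram` and `generate`, the whitespace tokenization, the `<s>`/`</s>` boundary markers, and the toy corpus are all assumptions.

```python
import random
from collections import defaultdict

def train_bigram(jokes):
    """Map each token to the list of tokens that followed it in the corpus."""
    model = defaultdict(list)
    for joke in jokes:
        # Hypothetical boundary markers so the model learns starts and ends.
        tokens = ["<s>"] + joke.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            model[prev].append(nxt)
    return model

def generate(model, max_len=30, seed=None):
    """Sample a joke token by token until the end marker or max_len tokens."""
    rng = random.Random(seed)
    token, out = "<s>", []
    while len(out) < max_len:
        # Duplicates in the list make frequent continuations more likely.
        token = rng.choice(model[token])
        if token == "</s>":
            break
        out.append(token)
    return " ".join(out)

# Toy corpus, for illustration only.
toy_jokes = [
    "why did the chicken cross the road",
    "why did the duck cross the pond",
]
bigram_model = train_bigram(toy_jokes)
sample = generate(bigram_model, seed=0)
```

Every adjacent word pair in `sample` was seen in the training corpus, which is why N-gram output tends to be locally plausible but globally incoherent. Note that `generate` raises an error if the model was trained on an empty corpus.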

We also built a user-friendly web application that collects user ratings of how funny a given joke is, in the hope of using that data to retrain a better classifier and joke generator. We evaluated the classifiers by their F1 score on an evaluation set of jokes, and we measured the perplexity of the generators and compared it against baseline models trained on our dataset.

For future steps, we want to host the generator models in real time, so that we can serve our fine-tuned GPT model and update it in real time based on normalized user feedback. As stretch goals, we would like to use BERT as an adversarial classifier to build a Generative Adversarial Network joke generator. We also want to use our hate speech classifiers to filter out jokes before displaying them.

The code is hosted at the following link: https://github.com/Richard2926/NLP-Final
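The two evaluation metrics mentioned above, F1 for the classifiers and perplexity for the generators, can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the project's evaluation code: the function names are hypothetical, labels are assumed to be binary with 1 meaning "funny", and perplexity is assumed to be computed from per-token natural-log probabilities that a language model has already produced.

```python
import math

def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No true positives: precision or recall is zero, so F1 is zero.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def perplexity(token_log_probs):
    """exp of the mean negative log-probability per token (natural log)."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

# Made-up labels and probabilities, for illustration only.
example_f1 = f1_score([1, 1, 0, 0], [1, 0, 1, 0])
example_ppl = perplexity([math.log(0.25)] * 4)
```

Lower perplexity means the generator assigns higher probability to held-out jokes. A model that gives every token probability 0.25, as in the example, has a perplexity of 4, which is the same as choosing uniformly among four options at each step.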